From: Raoul Scarazzini <rasca@redhat.com>
Sent: Wednesday, August 24, 2016 2:56 AM
To: Boris Derzhavets; Wesley Hayutin; Attila Darazs
Cc: rdo-list
Subject: Re: [rdo-list] Instack-virt-setup vs TripleO QuickStart in regards of managing HA PCS/Corosync cluster via pcs CLI


What I can say for sure at the moment is that it's really hard to follow
your quoting :)

Still, some information is missing:

- Which version are you using? The latest? So are you installing from
master?

>

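No idea. My actions:

$ rm -fr .ansible .quickstart tripleo-quickstart
$ git clone https://github.com/openstack/tripleo-quickstart
$ cd tripleo*
$ ssh root@$VIRTHOST uname -a
$ vi config/general_config/ha.yml    ==> tune && save
$ bash quickstart.sh --config ./config/general_config/ha.yml $VIRTHOST

When I get the prompt to log in to the undercloud, /etc/yum.repos.d/ (on a
fresh undercloud QS build) contains delorean.repo files pointing to the
Mitaka Delorean trunks. Just check for yourself.

- Are the two versions the same while using quickstart and
instack-virt-setup?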

>

No, the versions are different (the baseurls in the delorean.repo files differ).

Details here:

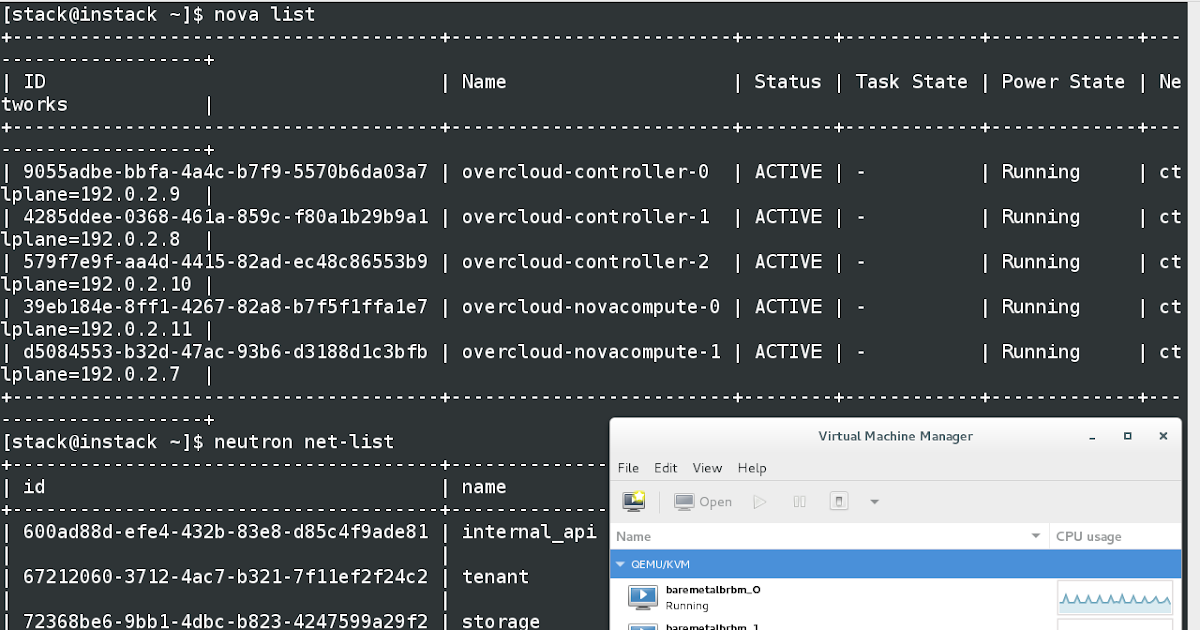

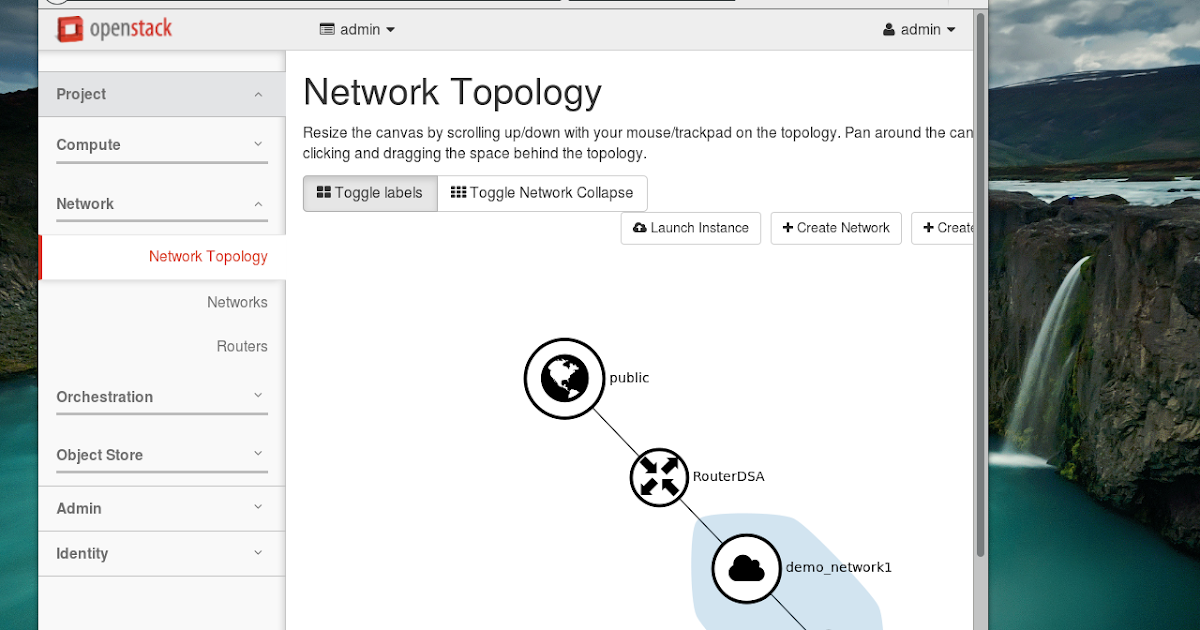

http://bderzhavets.blogspot.ru/2016/07/stable-mitaka-ha-instack-virt-setup.html
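One way to make the version comparison concrete is to diff the `baseurl` lines of the Delorean repo files from each environment. This is a hedged sketch, not part of either tool: it assumes copies of each undercloud's /etc/yum.repos.d/ have been placed in two local directories (their names are up to you), and the function names `delorean_baseurls` and `compare_delorean` are made up for this example.

```shell
#!/bin/bash
# Sketch: compare the Delorean trunks referenced by two copies of
# /etc/yum.repos.d (one per undercloud). Directory names are up to the caller.

# Print the sorted, de-duplicated baseurl values found in every
# delorean*.repo file of the given directory.
delorean_baseurls() {
    local dir=$1
    # -h drops file names; sed strips the "baseurl =" key and trailing blanks
    grep -h '^[[:space:]]*baseurl[[:space:]]*=' "$dir"/delorean*.repo 2>/dev/null |
        sed -e 's/^[[:space:]]*baseurl[[:space:]]*=[[:space:]]*//' \
            -e 's/[[:space:]]*$//' |
        sort -u
}

# Return 0 when both directories list the same trunks; otherwise print the
# differences in diff(1) format and return non-zero.
compare_delorean() {
    diff <(delorean_baseurls "$1") <(delorean_baseurls "$2")
}
```

For example, `compare_delorean ./repos-instack ./repos-quickstart` (hypothetical directory names) prints nothing and succeeds only when both undercloud builds point at the same trunks.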

- Do we have some logs to look at?

>

Sorry, that was not my concern.
My primary goal was to identify a sequence of steps that brings the PCS
cluster to a proper state with 100% certainty.
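For the logs question, sosreports can be gathered from every controller in one pass. A minimal sketch, assuming passwordless SSH from the undercloud as `heat-admin` (the default TripleO overcloud user) with sudo rights on the controllers; the function name and the `DRY_RUN` switch are illustrative only.

```shell
#!/bin/bash
# Sketch: run sosreport non-interactively on each controller from the undercloud.
# Assumes passwordless SSH as heat-admin and sudo rights on the controllers.
# Set DRY_RUN=1 to only print the commands that would be run.
collect_sosreports() {
    local host
    local -a cmd
    for host in "$@"; do
        cmd=(ssh "heat-admin@${host}" sudo sosreport --batch)
        if [ "${DRY_RUN:-0}" = 1 ]; then
            echo "${cmd[*]}"
        else
            # --batch skips the interactive prompts
            "${cmd[@]}" || echo "sosreport failed on ${host}" >&2
        fi
    done
}
```

Usage: `collect_sosreports 192.0.2.10 192.0.2.11 192.0.2.12`, with placeholder addresses to be replaced by the controllers' ctlplane IPs from `nova list`. The resulting archives stay on each controller and still have to be copied back.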

Again, I think we're hitting a version issue here.

>

I tested the QuickStart delorean.repo files (obtained at the same time on a
different box) in an instack-virt-setup build of the VENV.
It doesn't change the final overcloud cluster behavior much.

I am aware that this link is outdated (per Wesley Hayutin's response):

https://bluejeans.com/s/a5ua/

However, my current understanding of QuickStart CI (not TripleO CI) is based on this video.

I believe the core reason is the way TripleO QS assembles the undercloud for the VENV build.
It makes the HA controllers cluster pretty stable and always recoverable (VENV case).
It differs from the official way, at least for the time being.

I've already written that TripleO QS never issues

$ openstack overcloud images build --all

which, as far as I understand, the TripleO core team intends to remove.

>

Boris.

Thanks,

--

Raoul Scarazzini

rasca@redhat.com

On 23/08/2016 19:42, Boris Derzhavets wrote:

>

>

>

> ------------------------------------------------------------------------

> *From:* Raoul Scarazzini <rasca@redhat.com>

> *Sent:* Tuesday, August 23, 2016 11:29 AM

> *To:* Boris Derzhavets; Wesley Hayutin; Attila Darazs

> *Cc:* rdo-list

> *Subject:* Re: [rdo-list] Instack-virt-setup vs TripleO QuickStart in

> regards of managing HA PCS/Corosync cluster via pcs CLI

>

> Hi Boris,
> so, from what I see, the pcs commands that stop and start the cluster on
> the rebooted node should not be used. It can happen that a service fails
> to start, but we need to investigate why from the logs.

>

> Remember that cleaning up resources can be useful if we know what
> happened, but doing it repeatedly makes no sense. In addition, remember
> that you can run just "pcs resource cleanup" to clean up the entire
> cluster status and, in a way, "start from the beginning".
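Following that advice, a single full cleanup can be followed by polling `pcs status` instead of chaining fixed sleeps. This is a sketch under stated assumptions: it treats any `pcs status` line containing "Stopped" or "FAILED" as a problem (a resource disabled on purpose would also match), and the timeout and interval defaults are arbitrary.

```shell
#!/bin/bash
# Sketch: one full "pcs resource cleanup" (no resource id), then wait until
# "pcs status" no longer shows stopped or failed resources.
# Assumption: problems show up as lines containing "Stopped" or "FAILED".

cluster_has_failures() {
    pcs status | grep -Eq 'Stopped|FAILED'
}

# $1: timeout in seconds (default 300), $2: poll interval (default 10)
cleanup_all_and_wait() {
    local timeout=${1:-300} interval=${2:-10} waited=0
    pcs resource cleanup
    while cluster_has_failures; do
        if [ "$waited" -ge "$timeout" ]; then
            echo "resources still stopped/failed after ${timeout}s" >&2
            return 1
        fi
        sleep "$interval"
        waited=$((waited + interval))
    done
    echo "pcs status: no stopped or failed resources"
}
```

A non-zero exit from `cleanup_all_and_wait` is the point at which, as suggested above, the logs should be read instead of cleaning up again.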

>

> Now, about this specific problem, we need to understand what is happening
> here. Correct me if I'm wrong:
>
> 1) We have a clean env in which we reboot a node;
> That is correct
>
> 2) The node comes up, but some resources fail;
> All resources fail
>
> 3) After some cleanups the env becomes clean again;

>

> a) If the VENV is set up by instack-virt-setup (official guide), the
> mentioned script start.sh works right away. It also comes from the
> official guide.
>
> b) If the VENV is set up by TripleO QuickStart (where undercloud.qcow2
> gets uploaded to the libvirt pool with the overcloud images already
> integrated, per Jon's video explanation of QuickStart CI vs TripleO CI),
> then, in my experience, before attempting start.sh I MUST restart the
> PCS cluster on the bounced Controller-X, then invoke `. ./start.sh` (not
> simply ./start.sh).
> Pretty often a second run of start.sh is required from another
> controller-Y.
> Sometimes I cannot fix it in script mode and have to run the commands
> manually with delays of more than 10 sec. So finally (about 25 tests
> passed) I get `pcs status` OK.
> In other words, all services are up and running on every
> controller-X, Y, Z.

>

> Details :-

> http://bderzhavets.blogspot.ru/2016/08/emulation-rdo-triple0-quickstart-ha.html

>


>

> Is this the sequence of operations you are using? Is the problem

> systematic and can we reproduce it?

>>

> YES

>>

> Can we grab sosreports from the

> machine involved?

>>

> Could you instruct me how to do this?

>>

> Most important question: which OpenStack version are

> you testing?

>>

> Mitaka stable:
>
> [tripleo-quickstart@stack] $ bash quickstart.sh --config ./ha.yml $VIRTHOST
>
> By default (no --release specified), the Mitaka Delorean trunks get
> selected. Just check the delorean.repo files in /etc/yum.repos.d/ that
> quickstart places on the undercloud when it exits and asks you to
> connect to the undercloud.

>>

> Boris

> --

> Raoul Scarazzini

> rasca@redhat.com

>

>

> On 22/08/2016 13:49, Boris Derzhavets wrote:

>>

>> Sorry for my English.
>>
>> I meant "I was also keeping track of the Galera DB via `clustercheck`",
>> not "kept".

>>

>>

>> Boris

>>

>>

>> ------------------------------------------------------------------------

>> *From:* rdo-list-bounces@redhat.com <rdo-list-bounces@redhat.com> on

>> behalf of Boris Derzhavets <bderzhavets@hotmail.com>

>> *Sent:* Monday, August 22, 2016 7:29 AM

>> *To:* Raoul Scarazzini; Wesley Hayutin; Attila Darazs

>> *Cc:* rdo-list

>> *Subject:* Re: [rdo-list] Instack-virt-setup vs TripleO QuickStart in

>> regards of managing HA PCS/Corosync cluster via pcs CLI

>>

>>

>>

>>

>>

>> ------------------------------------------------------------------------

>> *From:* Raoul Scarazzini <rasca@redhat.com>

>> *Sent:* Monday, August 22, 2016 3:51 AM

>> *To:* Wesley Hayutin; Boris Derzhavets; Attila Darazs

>> *Cc:* David Moreau Simard; rdo-list

>> *Subject:* Re: [rdo-list] Instack-virt-setup vs TripleO QuickStart in

>> regards of managing HA PCS/Corosync cluster via pcs CLI

>>

>> Hi everybody,

>> sorry for the late response but I was on PTO. I don't understand the

>> meaning of the cleanup commands, but maybe it's just because I'm not

>> getting the whole picture.

>>

>>>

>> I have to confirm that the fault was mine: the PCS CLI works on TripleO
>> QuickStart, but it requires a pcs cluster restart on the particular node
>> that went down via `nova stop controller-X` and was brought up via
>> `nova start controller-X`.
>> Details here:

>>

>> http://bderzhavets.blogspot.ru/2016/08/emulation-rdo-triple0-quickstart-ha.html
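The bounce used in these tests can be scripted from the undercloud. A minimal sketch, assuming `stackrc` has been sourced so the nova and openstack clients talk to the undercloud; it keeps `nova stop`/`nova start` as in the text and polls `openstack server show <name> -f value -c status` until the server reports SHUTOFF and then ACTIVE. Function names, timeout and interval are illustrative.

```shell
#!/bin/bash
# Sketch: stop and restart one overcloud controller from the undercloud,
# waiting for each state change. Assumes stackrc is sourced.

# Wait until the server reaches the wanted status or give up.
# $1: server, $2: wanted status, $3: timeout (default 600 s), $4: interval (default 10 s)
wait_for_status() {
    local server=$1 wanted=$2 timeout=${3:-600} interval=${4:-10} waited=0
    until [ "$(openstack server show "$server" -f value -c status)" = "$wanted" ]; do
        if [ "$waited" -ge "$timeout" ]; then
            echo "$server did not reach $wanted within ${timeout}s" >&2
            return 1
        fi
        sleep "$interval"
        waited=$((waited + interval))
    done
}

bounce_controller() {
    local server=$1
    nova stop "$server" &&
        wait_for_status "$server" SHUTOFF &&
        nova start "$server" &&
        wait_for_status "$server" ACTIVE
}
```

Usage: `bounce_controller overcloud-controller-1`, where the server name is hypothetical and should be taken from `nova list`; `pcs status` on a surviving controller then shows how the cluster reacts.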

>


>

>

>>

>> A VENV set up with instack-virt-setup doesn't require running (on the
>> bounced controller node)
>>
>> # pcs cluster stop
>> # pcs cluster start
>>
>> before issuing start.sh:

>>

>> #!/bin/bash -x

>> pcs resource cleanup rabbitmq-clone ;

>> sleep 10

>> pcs resource cleanup neutron-server-clone ;

>> sleep 10

>> pcs resource cleanup openstack-nova-api-clone ;

>> sleep 10

>> pcs resource cleanup openstack-nova-consoleauth-clone ;

>> sleep 10

>> pcs resource cleanup openstack-heat-engine-clone ;

>> sleep 10

>> pcs resource cleanup openstack-cinder-api-clone ;

>> sleep 10

>> pcs resource cleanup openstack-glance-registry-clone ;

>> sleep 10

>> pcs resource cleanup httpd-clone ;

>>

>> # . ./start.sh

>>

>> In the worst-case scenario I have to issue start.sh twice, from different
>> controllers.
>> `pcs resource cleanup openstack-nova-api-clone` attempts to start the
>> corresponding service, which is down at the moment. In fact, the two
>> cleanups above start all Nova services, and the one Neutron cleanup
>> starts all Neutron agents as well.
>> I was also keeping track of the Galera DB via `clustercheck`.
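The Galera check can be run against all controllers at once. A sketch under assumptions: `heat-admin` SSH access with sudo on each controller, and `clustercheck` answering with an HTTP-style status line in which "200 OK" means the node is synced. The function names are made up for this example.

```shell
#!/bin/bash
# Sketch: ask clustercheck on every controller whether its Galera node is synced.
# Assumes heat-admin SSH access with sudo, and an HTTP-style first line
# from clustercheck where status 200 means "synced".

# $1: clustercheck output; succeed if its first line carries status 200
galera_synced() {
    head -n1 <<<"$1" | grep -q ' 200 '
}

check_galera() {
    local host out rc=0
    for host in "$@"; do
        out=$(ssh "heat-admin@${host}" sudo clustercheck 2>/dev/null)
        if galera_synced "$out"; then
            echo "${host}: synced"
        else
            echo "${host}: NOT synced"
            rc=1
        fi
    done
    return "$rc"
}
```

Running `check_galera` with the three controller addresses before and after start.sh gives a quick view of whether the database side recovered as well.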

>>

>> Thanks.

>> Boris

>>>

>>

>>

>> I guess we're hitting a version problem here: if you deploy the current
>> master (i.e. with quickstart) you'll get an environment with the
>> constraints limited to the core services because of [1] and [2] (so none
>> of the mentioned services exist in the cluster configuration).
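Given that the mentioned services may not exist in the cluster at all, a start.sh-style script could first check which clone resources are actually defined. A sketch under assumptions: it detects a clone by a "Clone Set: <id> [" line in `pcs status` output (the pcs 0.9 layout), reuses the resource list from the start.sh above, and its function names are hypothetical.

```shell
#!/bin/bash
# Sketch: clean up only those clone resources from start.sh that exist in
# this cluster. Assumption: pcs status prints "Clone Set: <id> [<resource>]".

wanted_clones=(rabbitmq-clone neutron-server-clone openstack-nova-api-clone
    openstack-nova-consoleauth-clone openstack-heat-engine-clone
    openstack-cinder-api-clone openstack-glance-registry-clone httpd-clone)

# Print each argument that appears as a clone set in "pcs status".
existing_clones() {
    local status res
    status=$(pcs status)
    for res in "$@"; do
        if grep -qF -- "Clone Set: ${res} [" <<<"$status"; then
            echo "$res"
        fi
    done
}

cleanup_existing_clones() {
    local res
    for res in $(existing_clones "${wanted_clones[@]}"); do
        pcs resource cleanup "$res"
    done
}
```

On a deployment with constraints limited to the core services, `existing_clones` would simply print fewer names, which also makes the version difference visible.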

>>

>> Hope this helps,

>>

>> [1] https://review.openstack.org/#/c/314208/

>> [2] https://review.openstack.org/#/c/342650/

>>

>> --

>> Raoul Scarazzini

>> rasca@redhat.com

>>

>> On 08/08/2016 14:43, Wesley Hayutin wrote:

>>> Attila, Raoul

>>> Can you please investigate this issue.

>>>

>>> Thanks!

>>>

>>> On Sun, Aug 7, 2016 at 3:52 AM, Boris Derzhavets

>>> <bderzhavets@hotmail.com <mailto:bderzhavets@hotmail.com>> wrote:

>>>

>>> On a TripleO HA controller cluster installed via instack-virt-setup, PCS
>>> CLI commands like:
>>>
>>> pcs resource cleanup neutron-server-clone
>>> pcs resource cleanup openstack-nova-api-clone
>>> pcs resource cleanup openstack-nova-consoleauth-clone
>>> pcs resource cleanup openstack-heat-engine-clone
>>> pcs resource cleanup openstack-cinder-api-clone
>>> pcs resource cleanup openstack-glance-registry-clone
>>> pcs resource cleanup httpd-clone
>>>
>>> have been working as expected on bare metal.

>>>

>>>

>>> The same cluster set up via QuickStart (virtual ENV), after bouncing one
>>> of the controllers included in the cluster, ignores the PCS CLI, at least
>>> in my experience (which is obviously limited), or else the format of
>>> particular commands is wrong for QuickStart.

>>>

>>> I believe that dropping (completely replacing) instack-virt-setup is not
>>> a good idea in general. Personally, I believe that, as with packstack, it
>>> is always good to have a VENV configuration tested before going to a
>>> bare metal deployment.

>>>

>>> My major concern is maintenance and disaster recovery tests, rather than
>>> the deployment itself. What good is TripleO QuickStart running on bare
>>> metal to me if I cannot replace a crashed VM controller and am limited to
>>> services HA (all 3 cluster VMs running on a single bare metal node)?

>>>

>>>

>>> Thanks

>>>

>>> Boris.

>>>

>>>

>>>

>>>

>>>

>>> ------------------------------------------------------------------------

>>>

>>>

>>>

>>> _______________________________________________

>>> rdo-list mailing list

>>> rdo-list@redhat.com <mailto:rdo-list@redhat.com>

>>> https://www.redhat.com/mailman/listinfo/rdo-list

>>> <https://www.redhat.com/mailman/listinfo/rdo-list>

>>>

>>> To unsubscribe: rdo-list-unsubscribe@redhat.com

>>> <mailto:rdo-list-unsubscribe@redhat.com>

>>>

>>>

